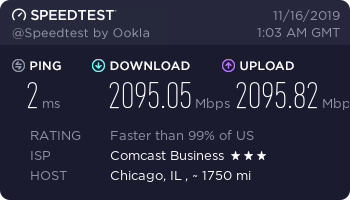

If you are following my articles here, you may have heard that I recently got Comcast Gigabit Pro and now have 2Gbps Internet at home.

Many have asked me how I am accomplishing this internally and what approach I have taken to getting multi-gigabit networking up and running in my house, so without further ado…

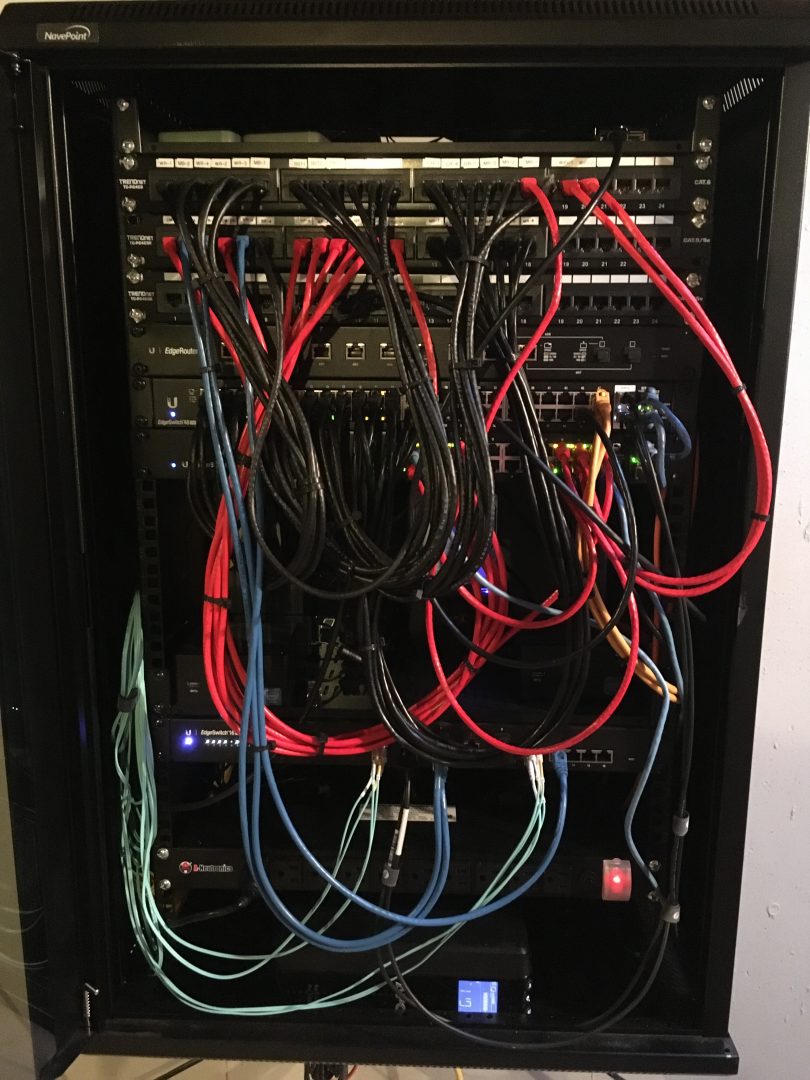

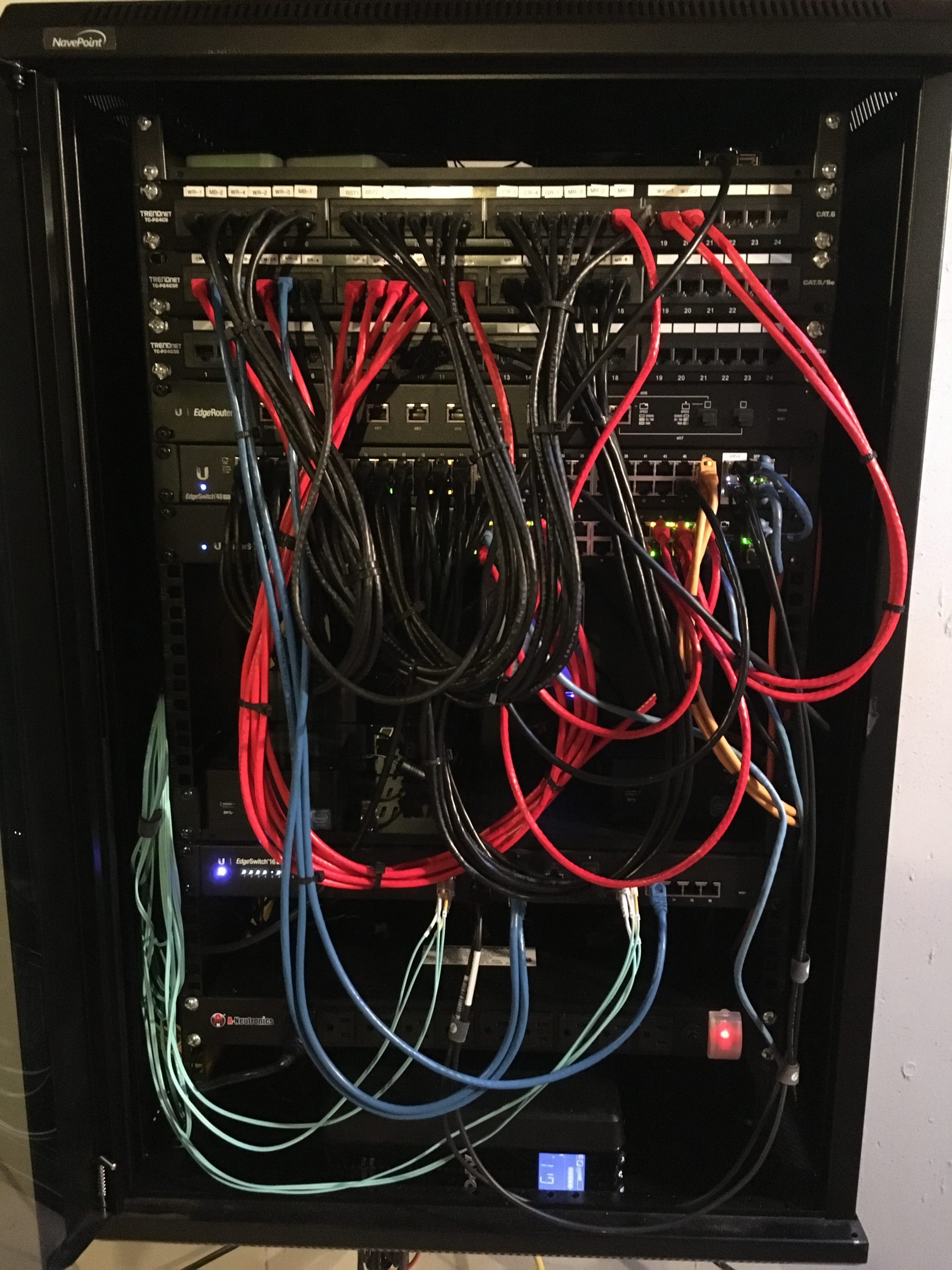

My Edge Network Cabinet

This cabinet is where my Internet connection enters the house from Comcast. The top two rack U’s are Comcast-owned equipment.

- Fiber Patch Panel – Where the Comcast fiber terminates inside my house.

- Juniper ACX2100 – Comcast’s fiber CPE, acting as just a switch to pass the handoff to me.

My equipment in here consists of:

- A PDU for nice fancy cable management. (The UPS that powers this rack is located elsewhere.)

- Ubiquiti EdgeRouter Infinity (ER-8-XG).

The EdgeRouter Infinity has 4 ports in use. I ran two 10G fiber connections to my core networking cabinet, for reasons I will explain later. There is one 10G fiber connection to the Juniper and one 1G ethernet connection to the Juniper.

This is because the Comcast Gigabit Pro service provides two circuits, a 2Gbps fiber and a 1Gbps ethernet with different IPs. The EdgeRouter Infinity is my termination point for both of these connections and it holds both public IPs.

I use Policy Based Routing to direct traffic to the desired connection. Currently, I route only my DNS resolvers, VoIP, and Twitch RTMP (broadcasting) out my single gigabit ethernet connection. I also have an off-site video recording solution for my security cameras, which I have routed out this connection. This way those critical services, which have fairly “consistent” usage, will not be degraded by surges of traffic on the fiber line. This is completely unnecessary, but it seemed like a good use of the extra capacity to me. At any time I can add an IP to the NAT group that I set up and route it out the connection of my choice.
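The routing decision described above can be modeled in a few lines of Python. This is only a sketch of the idea, not my router configuration: the interface names, the addresses, and the `select_wan` helper are all made up for illustration.

```python
import ipaddress

# Hypothetical connection labels, for illustration only.
FIBER_WAN = "fiber-2g"
ETHERNET_WAN = "ethernet-1g"

# Stand-in for the NAT group: sources that should leave via the 1Gbps
# ethernet circuit. All addresses here are invented examples.
ETHERNET_GROUP = [
    ipaddress.ip_network("192.168.10.53/32"),  # a DNS resolver
    ipaddress.ip_network("192.168.20.0/28"),   # VoIP adapters
]


def select_wan(source_ip: str) -> str:
    """Return the connection a packet from source_ip would be routed out."""
    addr = ipaddress.ip_address(source_ip)
    if any(addr in net for net in ETHERNET_GROUP):
        return ETHERNET_WAN
    # Everything not in the group takes the default path over the fiber.
    return FIBER_WAN


print(select_wan("192.168.10.53"))
print(select_wan("192.168.1.100"))
```

Adding a device to the ethernet path then amounts to appending one more entry to the group, which mirrors how adding an IP to the NAT group works on the router.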

My Core Network Cabinet

My core network cabinet is the next step and the main distribution panel for Internet and network connectivity in my house.

It contains the following equipment:

- 2 Obihai Obi200 VoIP adapters for my two Google Voice lines.

- Raspberry Pi for Bonjour relay between VLANs. (Helps the Chromecasts work as expected across my multiple VLANs.)

- 3 CAT6 patch panels for ethernet distribution. The cables on the other sides of these panels go to wall jacks and installed mounted equipment throughout my house.

- My old EdgeRouter ERPro-8 that I haven’t deracked yet. I used to use this as my core router for my cable Internet lines.

- 48 Port Ubiquiti EdgeSwitch (ES-48-Lite), for 1Gbps ethernet distribution to general purpose wall jacks.

- 24 Port Ubiquiti EdgeSwitch (ES24-500W), for 1Gbps POE ethernet distribution to IP cameras and wireless access points.

- Several small project computers (similar to NUCs) for seasonal projects.

- Ubiquiti EdgeSwitch 16-XG (ES-16-XG), for 10Gbps network distribution.

- PDU for fancy cable management.

- UPS that powers both this and the Edge cabinet next to it.

The bread and butter in this cabinet is the ES-16-XG which acts as a core 10Gbps distribution switch for the rest of my network.

While my server rack and residential network are two separate physical networks, separated by VLANs, a 2x10Gbps fiber connection runs from the ES-16-XG to my server rack and provides inter-VLAN connectivity between my servers and the rest of my network.

Additionally, there is a 2x10Gbps uplink to the 48 port switch. The POE switch does not support 10G, so it has a 2x1Gbps uplink to the 48 port switch. This means the theoretical maximum throughput on my WiFi would be 2Gbps, but that is also the maximum speed of the fiber drop that I am using as my primary Internet connection, so this is acceptable to me.
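The reasoning above is a bottleneck calculation: the usable throughput of a path is set by its narrowest hop. A rough sketch, using the aggregate link speeds mentioned in this post. The core-to-edge hop is assumed to be a single 10G link here, and the `bottleneck` helper is just for illustration.

```python
# Aggregate link capacities in Gbps along the path from a WiFi access point
# to the Internet. The ES-16-XG -> EdgeRouter figure is an assumption
# (one 10G link); the other values come from the description above.
path = [
    ("POE switch -> 48 port switch", 2 * 1),
    ("48 port switch -> ES-16-XG", 2 * 10),
    ("ES-16-XG -> EdgeRouter Infinity", 10),
    ("EdgeRouter Infinity -> Comcast fiber", 2),
]


def bottleneck(hops):
    """Return the (name, gbps) pair of the narrowest hop on the path."""
    return min(hops, key=lambda hop: hop[1])


name, gbps = bottleneck(path)
print(f"Narrowest hop: {name} at {gbps} Gbps")
```

Note that these are theoretical aggregate figures. A single flow across a bonded pair of links will often be limited to one member link, depending on how the bond hashes traffic.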

10Gbps to My Computers

The remaining 10G ports on the ES-16-XG can be used for 10Gbps network connectivity of other devices. I have a total of 3 computers on the second floor of my house that are connected with 10Gbps currently.

- My main Linux workstation.

- My gaming PC.

- My DJ studio computer where I broadcast to Twitch.

This can be accomplished by using the 4 RJ45 ports that come on the ES-16-XG, but I didn’t want to be limited to 4 ports, so I am also using SFP-10G-T transceivers from FiberStore. They are currently selling for $59 new.

I haven’t really encountered any significant difference between this and the onboard 10G ethernet ports. I plan to continue to use these to expand the 10G connectivity as needed.

I have no problems running 10G over my existing CAT6 wiring in my house that I ran 4 years ago. I am able to get about 9.6Gbps on iperf from the second floor computers to the server rack in the basement.

To accomplish this on the computers upstairs, I am using Intel X540-T2 network cards I bought on eBay. They are currently going for about $130 used.

So, it is somewhat costly to get 10G to a computer upstairs via ethernet, but within my price range and working just as reliably as standard 1Gbps ethernet for me so far.

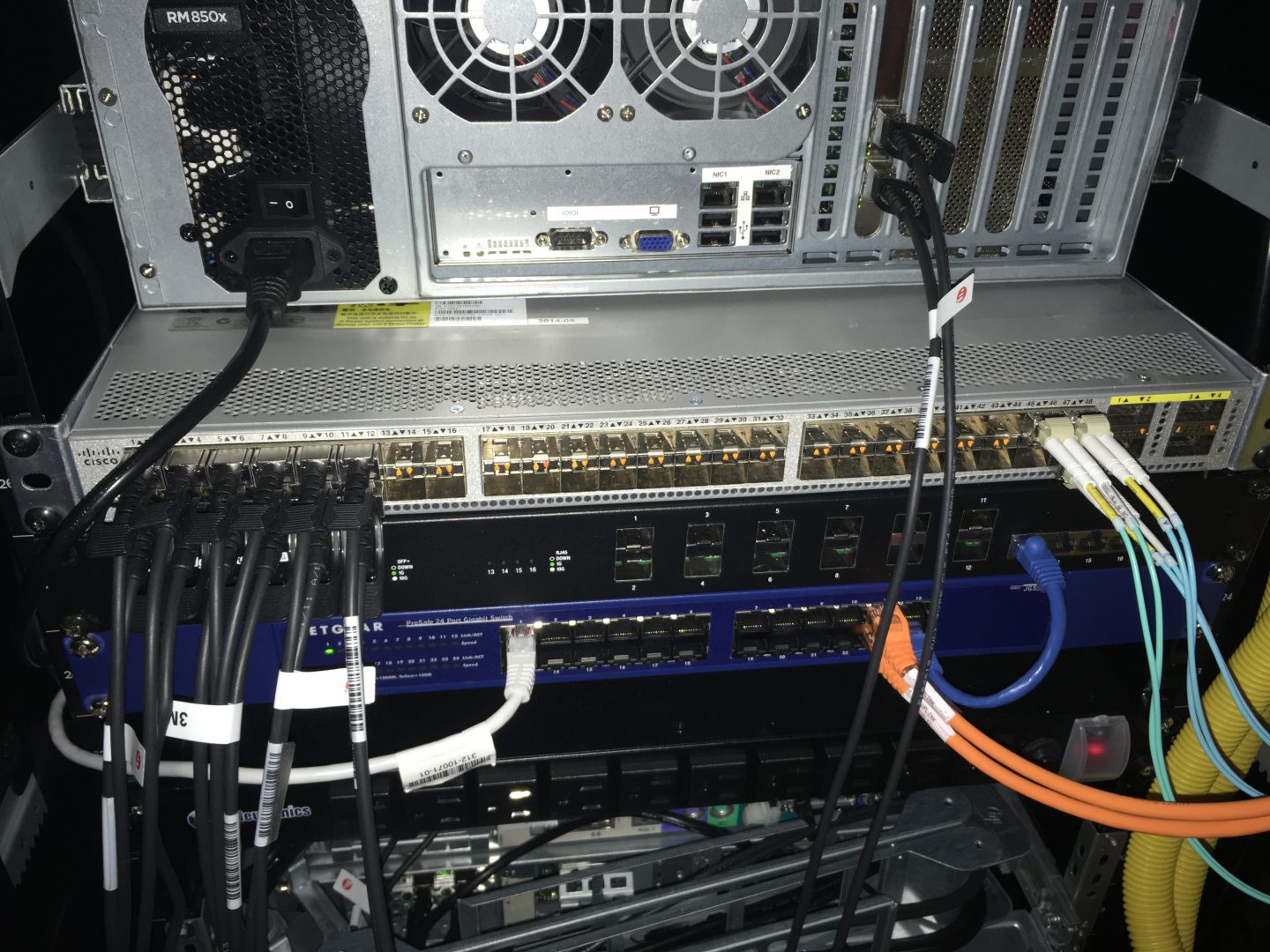

10Gbps to My Servers

For my server rack, I am using a Cisco Nexus 3064-X (N3K-C3064PQ-10GX) as the core switch.

This was obtained on eBay for under $400 and was actually cheaper than the EdgeSwitch (because I bought it used), but it is not ideal as a core residential switch because it is too deep for my wall mount network cabinet. For the server rack, though, it works great and provides 48 10G ports (and 4x 40G ports).

As mentioned earlier, it has 2x10G fiber uplinks to my residential network, and also has its own 10G uplink to the EdgeRouter. These are all fiber links.

For the servers themselves, I use direct attach copper (DAC) cables. This seems to be the cheapest way to do 10G over short distances and was ideal for my single server cabinet.

In a number of my servers, I am using Dell Mellanox CX332A cards, but I ran into some motherboard compatibility issues. Even after BIOS updates and other troubleshooting, I could not get the Mellanox cards working in all of my systems, so two servers are using Intel X520-DA2 cards instead.

I do not notice any difference between the different 10G cards or media… DAC, ethernet, fiber – it has not made any difference that I have noticed and all performs well.

My server rack contains several storage servers, each connected with two DAC cables (2x10G) that I have bonded.

For my other servers (hypervisors/compute), I have 2x10G as well, but set up as “public” and “private”: the public port has a routable IP that can lead to the Internet, and the private port has a non-routable IP on a separate VLAN that is used for inter-server communications, mostly accessing NFS mounts on the storage servers.

In practice 10G is well beyond what I need, so this is still over-built for my purposes. Prior to upgrading my Internet, I was doing the same with 1G ethernet, and actually did not even have the storage servers bonded.

As you might imagine, my growing rack of server equipment consumes some serious power, so to that end, while I was having some electrical work done recently, I had a dedicated 120V 20A circuit installed for the rack. I also had a second one installed for future expansion.
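For a sense of scale, here is the arithmetic for what a 120V 20A circuit can supply. The 80% figure is an assumption on my part, based on the common guideline of keeping continuous loads to 80% of a breaker's rating; check your local electrical code rather than trusting this sketch.

```python
VOLTS = 120
AMPS = 20
CONTINUOUS_PERCENT = 80  # assumption: common guideline for continuous loads


def circuit_watts(volts: int, amps: int, percent: int = 100) -> int:
    """Power in watts available at the given percentage of the rating."""
    return volts * amps * percent // 100


peak = circuit_watts(VOLTS, AMPS)
continuous = circuit_watts(VOLTS, AMPS, CONTINUOUS_PERCENT)

print(f"Full rating: {peak} W")
print(f"Continuous budget: {continuous} W")
```

Under that assumption, one circuit gives roughly 1.9kW of continuous headroom for the rack.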

What Am I Doing With All This Capacity?

There is no doubt that I still have much more bandwidth and capacity than I could ever use — and I am a heavy power user on a good day.

Having this kind of network and connectivity to the Internet has allowed me to free myself from any worry of excessive bandwidth usage. I no longer need to ask if I can attempt a project due to bandwidth limitations.

The greatest limitation to me now tends to be external services: VPNs, servers, and connections outside of my control. Maxing out my Internet is basically impossible, even when downloading games from Steam (which is probably the application that comes the closest to doing it).

The best thing for me to come out of this connection is the freedom to use it however I want without problems. I need not worry about backup scripts running while I’m gaming, or Steam downloads choking out my Twitch streams.

My peak usage amounts that actually show up on my graphs are typically still under 1Gbps, and in many cases under 100Mbps, but the ability to do anything anytime without fear of choking due to node congestion or RF interference of cable service is very freeing.

The service is expensive, but for me it’s money well spent.
