Recently, I decided to begin the process of retiring my Ubiquiti EdgeRouter Infinity, for a number of reasons, including the fact that I don’t have a spare and the availability and pricing of these routers have only gotten worse with each passing year. I wanted to replace this setup with something that could be more easily swapped in the event of a failure, and having been a pfSense (and even m0n0wall) user years ago, I decided to give OPNsense a try.

I ordered some equipment which provided a good compromise between enterprise grade, lots of PCIe slots, cost, and power efficiency. I ended up building a system with an E5-2650L v3 processor and 64GB of RAM. I decided to start by installing Proxmox, allowing me to make this into a hub for network services in the future rather than just a router. After all, I have a Proxmox cluster in my server rack, Proxmox VMs are easy to back up and restore, and even inside of virtual machines, I have always found the multi-gig networking to be highly performant. This all changed when I installed OPNsense.

Earlier this year, my Internet was upgraded to 6Gbps (7Gbps aggregate between my two hand-offs). This actually was another factor in my decision to go back to using a computer as a router. There are rumors of upgrades to 10Gbps and beyond in the pipeline, and I want to be prepared in the future with a system that will allow me to swap in any network hardware I want.

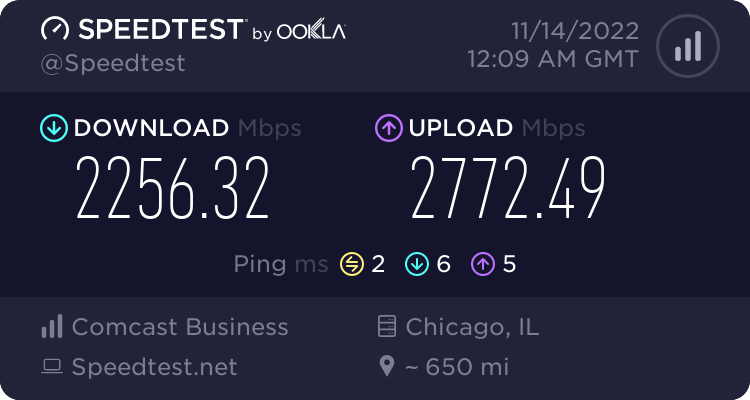

I’d assumed that modern router software like this should have no problem handling multi-gigabit connectivity, especially on such a powerful system (I mean I built an E5 server…), but after installing OPNsense in my Proxmox VM and trying to use it on my super fast connection, I was instantly disappointed. Out of the box, the best I could do was 2-3Gbps (about half of my speed).

Through the course of my testing, I realized that even testing with iperf from my OPNsense VM to other computers on my local network, the speeds were just as bad. So why was OPNsense only capable of using about 25% of a 10Gbps network connection? I spent several days combing through articles and forum threads trying to determine just that, and now I am compiling my findings for future reference. Hopefully some of you reading this will now save some time.

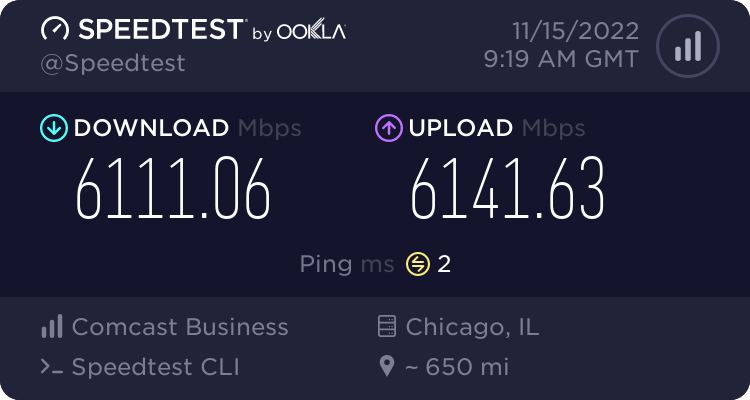

I did eventually solve my throughput issues, and I’m back to my full connection speed.

Ruling out hardware issues…

I know from my other hypervisor builds that Proxmox is more than capable of maxing out a 10Gbps line rate with virtual machines… and my new hypervisor was equipped with Intel X520-DA2 cards, which I know have given me no issues in the past.

Just to rule out any issues with this hardware I’d assembled, I created a Debian 11 VM attached to the same virtual interfaces and did some iperf testing. I found that the Debian VM had no problems performing as expected out of the box, giving me about 9.6Gbps on my iperf testing on my LAN.
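A baseline test like this is easy to script so the same measurement can be repeated against each VM. The following is a minimal Python sketch, assuming iperf3 specifically (the version of iperf is not stated above) with an iperf3 server already listening on the target host. The function names and the default duration and stream count are hypothetical choices for illustration.

```python
import json
import subprocess


def received_gbps(iperf3_json: str) -> float:
    """Extract receiver-side TCP throughput in Gbps from `iperf3 -J` output."""
    result = json.loads(iperf3_json)
    return result["end"]["sum_received"]["bits_per_second"] / 1e9


def run_iperf3(server: str, seconds: int = 10, streams: int = 4) -> float:
    """Run an iperf3 client test against `server` and return Gbps received.

    Assumes the iperf3 binary is on PATH and a server is running on `server`.
    """
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"]
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return received_gbps(completed.stdout)
```

Running the same function from the Debian VM and from the OPNsense VM against the same LAN host gives numbers that can be compared directly.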

Proxmox virtual networking issues in OPNsense\FreeBSD?

Throughout the course of my research, I found out to my dismay that FreeBSD seemed to have a history of performance issues when it comes to virtual network adapters – not just on Proxmox, but on VMware as well.

Some sources seemed to suggest that VirtIO had major driver issues in FreeBSD 11 or 12 and I should be using E1000. Some sources seemed to suggest that VirtIO drivers should be fixed in the release I was using (which was based on FreeBSD 13).

I tested each virtual network adapter type offered in the Proxmox interface: VirtIO, E1000, Realtek RTL8139, and VMware vmxnet3.

Out of the box with no performance tuning, VirtIO actually performed the best for me by far. None of the other network adapter types were even able to achieve 1Gbps. VirtIO was giving me about 2.5Gbps. So, I decided to proceed under the assumption that VirtIO was the right thing to use, and maybe I just needed to do some additional tuning.

Throughout the course of my testing, I also tested using the “host” CPU type versus KVM64. To my great shock, KVM64 actually seemed to perform better, so I decided to leave this default in place. I did add the AES flag (because I am doing a lot of VPN stuff on my router, so might as well) and I did decide to enable NUMA, although I don’t think this added any performance boost.

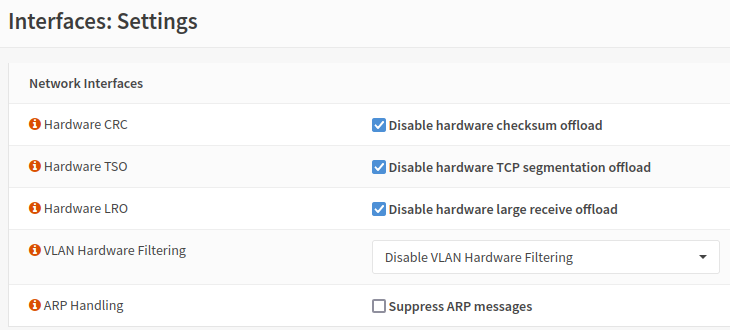

OPNsense Interface Settings, hardware offload good or bad?

It seems like the general consensus is, somewhat counterintuitively, that you should not enable Hardware TSO or Hardware LRO on a firewall appliance.

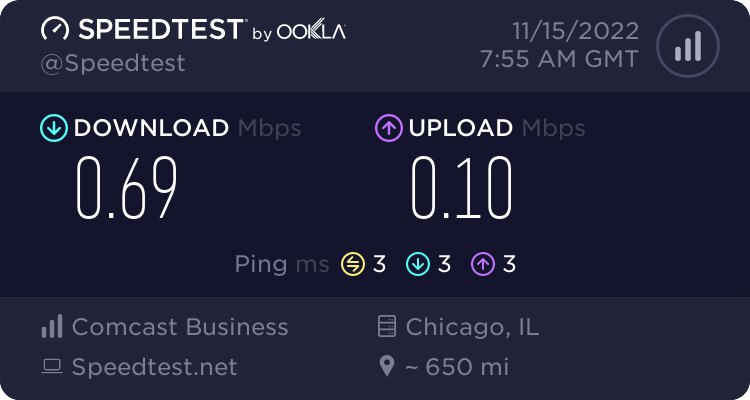

I tried each one of these interface settings individually, and occasionally I saw some performance gains (Hardware LRO gave me a noticeable performance boost), but some of the settings also tremendously damaged performance. The network was so slow with Hardware VLAN filtering turned on that I couldn’t even access the web UI reliably. I had to manually edit /conf/config.xml from the console to get back into the firewall.

I experienced some very strange issues with the hardware offloading. In some situations, the hardware offloading would help the LAN side perform significantly better, but the performance on the WAN side would take a nosedive. (I’m talking, 8Gbps iperf to the LAN, coinciding with less than 1Mbps of Internet throughput).

As a result of all these strange results, I later decided that the right move was to leave all of this hardware offloading turned off. In the end, I was able to achieve the above performance without any of it enabled.

OPNsense\FreeBSD, inefficient default sysctl tunables?

My journey into deeper sysctl tuning on FreeBSD began with this 11 page forum thread from 2020 from someone who seemed to be having the same problem as me. Other users were weighing in, echoing my experiences, all equally confused as to how OPNsense could be performing so poorly, with mostly disinterested responses from any staff weighing in on the topic.

It was through the forums that I stumbled on this very popular and well respected guide for FreeBSD network performance tuning. I combed over all of the writing in this guide, ignoring all of the ZFS stuff and DDoS mitigation stuff, focusing on the aspects of the write-up that aimed to improve network performance.

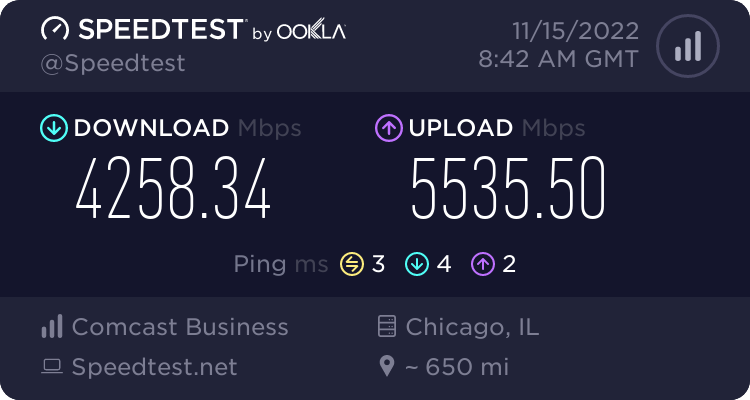

After making these adjustments, I did see a notable improvement: I was now able to achieve about 4-5Gbps through the OPNsense firewall! But my full Internet speed was still eluding me, and I knew there had to be more that I could do to improve the performance.

I ended up reading through several other posts and discussions, such as this thread on GitHub, this thread on the OPNsense forum about receive side scaling, the performance tuning guide for pfSense (a similar FreeBSD based firewall solution from which OPNsense was forked), a very outdated thread from 2011 about a similar issue on pfSense, and a two-year-old Reddit thread on /r/OPNsenseFirewall about the same issue.

Each resource I read through listed one or two other tunables which seemed to be the silver bullet for its author. I kept changing things one at a time and rebooting my firewall. I didn’t keep careful track of which changes made an impact and which didn’t, because as I read what each tunable did, I generally agreed that “yeah, increasing this seems like a good idea”, and decided to keep even the modifications that didn’t seem to make a noticeable performance improvement.

Perhaps you are in a position where you want to do more testing and narrow down which sysctl values matter for your particular setup, but I offer this as my known working configuration that resolved the speed issues for me, and which I am satisfied with. I have other projects to move on to and have spent more than enough time on this firewall one, it’s time to accept my performance gains and move on.

Configuration changes I decided to keep in my “known good” configuration.

If you haven’t enjoyed my rambling journey above of how I got here, then this is the part of this guide you’re looking for. Below are all of the configuration changes I decided to keep on my production firewall, the configuration which yielded the above speed test exceeding 6Gbps.

If you’re doing what I’m doing, you’re sitting with a default OPNsense installation inside of a Proxmox virtual machine. Here’s everything to change to get to the destination I arrived at.

Proxmox Virtual Machine Hardware Settings – Machine Type

I read conflicting information online about whether q35 or i440fx performed better with OPNsense. In the end, I ended up sticking with the default i440fx. I didn’t notice any huge performance swing one way or another.

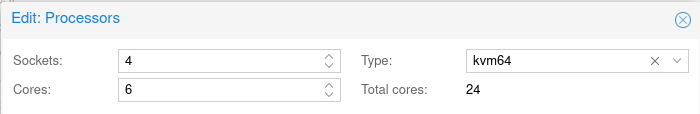

Proxmox Virtual Machine Hardware Settings – CPU

- Leave the CPU type as “KVM64” (default). This seemed to provide the best performance in my testing.

- I matched the total core count with my physical hypervisor CPU, since this will be primarily a router and I want the router to have the ability to use the full CPU.

- I checked “Enable NUMA” (but I don’t think this improved performance any).

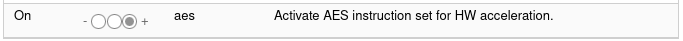

- I enabled the AES CPU flag, with the hope that it might improve my VPN performance, but I didn’t test if it did. I know it shouldn’t hurt.

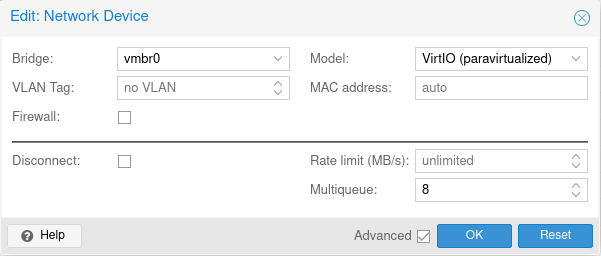

Proxmox Virtual Machine Hardware Settings – Network Adapters

- Disable the Firewall checkbox. There is no need for Proxmox to do any firewall processing, we’re going to do all our firewall work on OPNsense anyway.

- Use the VirtIO network device type. This provided the best performance in my testing.

- Set the Multiqueue setting to 8. Currently, 8 is the maximum value for this setting. This provides additional parallel processing for the network adapter.

OPNsense Interface Settings

The first and most obvious settings to tinker with were the ones in Interfaces > Settings in OPNsense. As I wrote above, these provided mixed results for me and were not very predictable. In the end, after extensively testing each option one by one, I decided to leave all the hardware offloading turned off.

OPNsense Tunables (sysctl)

After testing a number of tunable options (some in bulk, and some individually), I arrived at this combination of settings which worked well for me.

These can probably be adjusted in configuration files if you like, but I did it through the web UI. After changing these values, it’s a good idea to reboot the firewall entirely, as some of the values are applied only at boot time.

The best overall guide which got me the most information was this FreeBSD Network Performance Tuning guide I linked above. I’m not going to go into as much detail here, and not everything set below was from this guide, but it was a great jumping off point for me.

hw.ibrs_disable=1

This tunable controls IBRS, a CPU mitigation for the Spectre V2 vulnerability; setting it to 1 disables the mitigation. A lot of people suggested that disabling it was helpful for performance, though it does mean giving up that protection.

net.isr.maxthreads=-1

This uncaps the number of CPUs which can be used for netisr processing. By default this aspect of the network stack on FreeBSD seems to be single threaded. This value of -1 for me resulted in 24 threads spawning (for my 24 CPUs).

net.isr.bindthreads = 1

This binds each of the ISR threads to 1 CPU core, which makes sense to do since we are launching one per core. I’d guess that doing this will reduce interrupts.

net.isr.dispatch = deferred

Per this Github thread I linked earlier, it seems that changing this tunable to “deferred” or “hybrid” is required to make the other net.isr tunables do anything meaningful. So, I set mine to deferred.

net.inet.rss.enabled = 1

I decided to enable Receive Side Scaling. This didn’t come from the tuning guide either, it came from an OPNsense forum thread I linked earlier. In a nutshell, RSS is another feature to improve parallel processing of network traffic on multi-core systems.

net.inet.rss.bits = 6

This is a receive side scaling tunable from the same forum thread. I set it to 6 as it seems the optimal value is CPU cores divided by 4. I have 24 cores, so 24/4=6. Your value should be based on the number of CPU cores on your OPNsense virtual machine.
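Note that the value is a bit count, so the number of RSS buckets it produces is 2 raised to that value: 6 bits means 64 buckets. The Python sketch below shows that arithmetic. The helper names are hypothetical, and the "at least twice the CPU count" target is an assumption used for illustration (it matches how FreeBSD's default is commonly described), not a measured optimum.

```python
def rss_buckets(bits: int) -> int:
    """Number of RSS buckets implied by a net.inet.rss.bits value."""
    return 1 << bits


def smallest_bits_for(cpus: int, factor: int = 2) -> int:
    """Smallest bit count whose bucket count is at least factor * cpus.

    The default factor of 2 is an assumption for illustration only.
    """
    if cpus < 1 or factor < 1:
        raise ValueError("cpus and factor must be positive")
    target = factor * cpus
    # (target - 1).bit_length() is the smallest b with 2**b >= target.
    return (target - 1).bit_length()


if __name__ == "__main__":
    print(rss_buckets(6))         # buckets for the value used above
    print(smallest_bits_for(24))  # bit count for a 24-core VM
```

For 24 cores this target also lands on 6, so the value used here is consistent with either way of reasoning about it.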

kern.ipc.maxsockbuf = 614400000

I grabbed this from the FreeBSD Network Performance Tuning Guide; it was their recommended value if you have 100Gbps network adapters. The default value that shipped with my OPNsense installation corresponded with the guide’s value for 2Gbps networking. Since I may want to expand in the future, I decided to increase this to an absurd level so I don’t have to deal with it again. You may want to set a more rational value; 16777216 should work for 10Gbps. The guide linked above goes into great detail about what this value does and which other values it affects.

net.inet.tcp.recvbuf_max=4194304

net.inet.tcp.recvspace=65536

net.inet.tcp.sendbuf_inc=65536

net.inet.tcp.sendbuf_max=4194304

net.inet.tcp.sendspace=65536

These TCP buffer settings were taken from the FreeBSD Network Performance Tuning Guide. I didn’t look into them too deeply, but they were all equivalent to or larger than the buffers that shipped with OPNsense, so I rolled with them. The guide explains more about how these values can help improve performance.

net.inet.tcp.soreceive_stream = 1

Also from the tuning guide, this enables an optimized kernel socket interface which can significantly reduce the CPU impact of fast TCP streams.

net.pf.source_nodes_hashsize = 1048576

I grabbed this from the tuning guide as well, it likely didn’t help with my problem today, but it may prevent problems in the future. This increases the PF firewall hash table size to allow more connections in the table before performance deteriorates.

net.inet.tcp.mssdflt=1240

net.inet.tcp.abc_l_var=52

I grabbed these values from the tuning guide; they are intended to improve efficiency while processing IP fragments. There are slightly more aggressive values you can set here too, but these seem to be the safer values, so I went with them.

net.inet.tcp.minmss = 536

Another tuning guide value which I didn’t look into too heavily, but it configures the minimum segment size, or smallest payload of data which a single IPv4 TCP segment will agree to transmit, aimed at improving efficiency.

kern.random.fortuna.minpoolsize=128

This isn’t related to the network at all, but it was a value recommended by the tuning guide to improve the RNG entropy pool. Since I am doing VPN stuff on this system, I figure more RNG is better.

net.isr.defaultqlimit=2048

This value originated from my earlier linked Reddit thread, it was quickly added during the last batch of tunables that finally pushed me over the edge in terms of performance, and I decided I’d leave it even if it wasn’t doing anything meaningful. Increasing queuing values seems to have been a theme of the tuning overall.
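For convenience, here are all of the tunables from this section collected in one place. This is a small Python sketch that only renders them as `name=value` lines, the general format FreeBSD uses for sysctl-style settings. It does not apply anything, and which file (if any) a given tunable belongs in is not covered here; the `render` helper is hypothetical.

```python
# Tunables and values exactly as listed in this article.
TUNABLES = {
    "hw.ibrs_disable": "1",
    "net.isr.maxthreads": "-1",
    "net.isr.bindthreads": "1",
    "net.isr.dispatch": "deferred",
    "net.inet.rss.enabled": "1",
    "net.inet.rss.bits": "6",
    "kern.ipc.maxsockbuf": "614400000",
    "net.inet.tcp.recvbuf_max": "4194304",
    "net.inet.tcp.recvspace": "65536",
    "net.inet.tcp.sendbuf_inc": "65536",
    "net.inet.tcp.sendbuf_max": "4194304",
    "net.inet.tcp.sendspace": "65536",
    "net.inet.tcp.soreceive_stream": "1",
    "net.pf.source_nodes_hashsize": "1048576",
    "net.inet.tcp.mssdflt": "1240",
    "net.inet.tcp.abc_l_var": "52",
    "net.inet.tcp.minmss": "536",
    "kern.random.fortuna.minpoolsize": "128",
    "net.isr.defaultqlimit": "2048",
}


def render(tunables: dict[str, str]) -> str:
    """Return one 'name=value' line per tunable, in insertion order."""
    return "\n".join(f"{name}={value}" for name, value in tunables.items())


if __name__ == "__main__":
    print(render(TUNABLES))
```

Remember that `net.inet.rss.bits` and `kern.ipc.maxsockbuf` in particular were sized for a 24-core VM and future headroom, so adjust them for your own system.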

Good enough for now!

With all of the above changes, I achieved my desired performance with OPNsense, running in a KVM virtual machine on Proxmox.

I’d imagine that these same concepts would apply well to any FreeBSD based router solution, such as pfSense, and some could even apply to other FreeBSD based solutions common in homelab environments, such as FreeNAS. However, it appears from my research that OPNsense is uniquely limited in its performance (more limited than stock FreeBSD 13). So, your mileage may vary.

The above is not intended to be a comprehensive guide. I wrote it both for my own future reference and in the hope that some of the many folks who seem to be having these same performance issues, and who are being forced to stumble around in the dark looking for answers like I was, might try the settings in my guide and achieve the same great outcome.

Thank you so much for providing this info. I just wanted some kind of guide, even a baseline guide, to set something like this up and kept coming up short. As of a few weeks ago, put together a new OPNsense box using an R86S as a hypervisor after recommendation from servethehome https://www.servethehome.com/the-gowin-r86s-revolution-low-power-2-5gbe-and-10gbe-intel-nvidia/

I’ve dealt with router customizations in the past with OpenWRT and modding old WRT54G routers, but being in managed business IT, I got my hands on some higher end hardware for the time, like some Meraki products and such. Those were doing fine until I got this stupid awesome deal from my current ISP to run fiber direct to my apartment with speeds “up to” 10Gbps. So this is my first jump into a full custom appliance build, and why not run it virtualized? If I’m going to get wet, may as well dive into the deep end.

Managed testing with OPNsense running on Proxmox, absolute bare bones (HV is only running OPNsense right now) and can only get 2Gbps direct to the router with pretty much the same hardware virtualization config (no optimizations yet) and have been really nervous that the hardware just isn’t up to the task.

I’m going to set up my config outlined here and then work backwards if something’s off. I’m a little nervous about overprovisioning hardware assets to a VM, but that may not be that big of an issue with Proxmox, I don’t know. I’m used to dealing with ESXi and HyperV, proxmox is pretty new to me.

One of the biggest problems I run into isn’t so much bandwidth, but stability. Playing games with friends, especially when hosting (on a separate box), there’s just a lot of quirky behavior and major network problems. Not hosting, better on their end, but I appear noticeably laggy to them and I do experience issues as well. For a pipe like this, the only difference is this new appliance, so I’m doing something wrong for sure.

I also notice some degraded performance with VOIP calls and random hangups in apps like Youtube on media boxes. They work, but sometimes, not always, they’ll hang for about 10-15 seconds before starting. The CPU on this device is not fantastic by any means, but I feel like it should be enough to handle this if allocated properly.

If you have discovered anything new since you posted this, I’d love to hear what you did if you gained any performance improvements.

Hmmm, I have had this setup running on my connection since my write-up, and I haven’t noticed any of the performance or stability issues you’ve described, that’s very interesting and strange. Some of your VoIP symptoms almost sound like they are a DNS resolution delay, or a problem with a NAT traversal system the VoIP system depends on (like UPnP, STUN, TURN) – these types of things might explain why a delay before establishing a call could occur. I have two ObiHai Obi200 Google Voice lines here running behind my virtual OPNsense and I have never experienced anything like that with them.

Strange for sure. I ultimately had to jump back to using my old Netgear R7000 for doing WAN routing. It’s not utilizing the higher throughput at just 1Gbps (“just”) but it’s consistent, and the difference was immediately noticeable when I took the R86S off the network. When using OPNsense, I had the local network DNS set to OPNsense through Unbound and for DNS queries out to the broader internet, I was using AdGuard.

I can see how hiccups with DNS routing could cause delays in VoIP and other strange behavior, but DNS should be out of the picture once connection is established in a scenario like hosting a game server. Should just be straight IP to IP at that point, yeah?

Anyhow, my next plan is to put the R86S back on the network and have it just be a DNS server (through ADguard) and DHCP server and the Netgear as the gateway only, so I still get a few of the benefits of having tighter control over the network, but not deal with the instability while I play around with OPNsense some more.

I did configure OPNsense with your suggested settings and didn’t see an improvement in throughput or stabilization. I’m really leaning toward this being an issue with the hardware, not really OPNsense or the configuration. It was really bare bones, no VLANs or special routing policies, and no VPN involved. Tried configuring a QoS policy for just the box hosting my softphone application, but pretty sure I didn’t do that right and the whole network was on the fritz before I could test it much longer.

Add to that, OPNsense would seize up periodically, sometimes the whole host, with Proxmox not even allowing for a soft shutdown which would bring my whole network down, and it just feels like the hardware is the problem.

I started using an OPNsense router built on an i9-9900K with a dual 10GbE SFP NIC, since my ASUS router couldn’t handle my 5Gbps fiber speeds.

I prematurely applied all these tunables to the installation, and had terrible performance.

After reverting all the tunables to default it managed 5Gbps symmetric with no customization at all.

Overall, very interesting, but potentially not necessary at all depending on your hardware!

Very interesting indeed, perhaps these tweaks have more to do with the fact it is a virtualized environment. These settings are still rock solid for me, but as mentioned my OPNsense instance is running in a Proxmox VM.

Pingback: How to Install OPNSense firewall on PROXMOX hypervisor? - windgate

This seems like a somewhat spammy comment, but I’ll allow it as it does provide a legitimate link to a somewhat on-topic article.

https://windgate.net/how-to-install-opnsense-on-proxmox/

Have you tried install opnsense baremetal on R86S ? I am eyeing the exact unit!

Nope, I don’t have one of those, but looks like a cool idea.

Running OPNsense bare metal on the R86S now. Virtualizing really wasn’t good. It worked, but would crash after a few days. I think I was asking too much out of it even though on paper it would seem it could mostly handle being an HV with OPNsense as a VM with only some Docker containers running along side it. Just wasn’t the case.

Once I went bare metal, though, performance and stability have been rock solid. I don’t think I’ve had to reboot it in over a month and the last time I did was only for some testing I was doing. I’ve yet to get 10gbps out of it, but that’s mostly due to acquiring a 10gig card for my NAS, which aren’t cheap. 2.5gb works excellently though.

I think you have the net.inet.rss.bits calculation incorrect. It’s a number of bits in binary, so the bucket count is 2 to the power of that value. For example:

2^2 = 4

2^3 = 8

2^4 = 16

2^5 = 32

You also don’t need to set it since it is scaled to at least 2x the number of CPUs by default: https://github.com/freebsd/freebsd-src/blob/04682968c3f7993cf9c07d091c9411d38fb5540b/sys/net/rss_config.c#L90

That may be the case, I based that recommendation on this forum thread:

https://forum.opnsense.org/index.php?topic=24409.msg116941

It looks like you went direct to the source code. The forum thread seems to indicate that there was no current implementation for it at the time, maybe things have changed since that was posted in 2021.

Unfortunately these settings had absolutely no effect on resolving my problem. Thanks for this write up. I am definitely experiencing the same problem with the Comcast Gigabit Pro service, but I cannot figure it out.

In addition to your article, I also found this, which seems to indicate that there is a per core hardware limitation and there isn’t any way to get around it on a BSD system. I’m just not sure what to believe since you got it working on yours.

https://teklager.se/en/knowledge-base/apu2-1-gigabit-throughput-pfsense/

My OPNSense instance is running on a Proxmox hypervisor with an Intel E5-2650L v3, so 24 cores, and I did give 24 cores to the VM. Maybe some of our performance differences come down to hardware differences. I could have put a better and faster CPU in this build, but I wanted something relatively low power, and I thought this would be way overkill for a router, but it doesn’t seem to be overkill for OPNSense, lol.

I think a likely culprit is just bad FreeBSD compatibility with the Proxmox Virtio drivers, it would probably perform better on bare metal. I know I had to disable a lot of hardware offloading features to get things working right, and anecdotally, I see significantly higher CPU usage by processes named “vhost-####” (which apparently are Virtio related threads) on the hypervisor while the OPNSense VM is pushing 6Gbps of network traffic than when a comparable Debian VM is pushing 9Gbps of network traffic.

My Comcast Gigabit Pro was just upgraded this past week from X6 (6Gbps) to X10 (10Gbps), and I’m noticing that my OPNSense instance is having trouble going any faster than it was when I wrote this tuning article. But if I connect a Debian VM on the same hypervisor directly to my WAN port and assign the public IP to that, I can not only get full speed, but the CPU usage on the hypervisor while running the 10Gbps speed test is only about 10%, as opposed to the roughly 50-60% CPU usage I am seeing during a ~6Gbps speed test through OPNSense.

As a result I am now considering abandoning my OPNSense instance and migrating to a Debian NAT box. I’ve got a test bench running so I can learn to do this (and all the complicated policy routing stuff I want my router to do) with NFTables, since my prior routers like this used iptables which is now on its way to deprecation. I probably won’t continue to use OPNSense since it seems unable to keep up with my current connection on my current hardware.

I did consider going back to running it bare metal, but the ease of use of the whole thing being on Proxmox is just too alluring. It’s easy to backup, easy to migrate to new hardware without reinstalling and setting up the router all over again, and easy to plug\unplug virtual networks and make test instances (like I am doing now with my test Debian NAT VM, which I’m currently running on my 1 gig ethernet circuit from Comcast so I have a real test environment without disturbing my real connection).

So right now, I think I’ll stick to a Proxmox virtual router environment, but I think I will be doing the Debian NAT VM instead. I may keep OPNSense running to provide other services, but once I set up a DHCP server on another VM, it may no longer be necessary to keep it running at all.

I’m still going to keep the article up, as I think it will work well for people on 1-5Gbps connections still, but since my connection is now 10Gbps I think I just have to acknowledge that OPNSense isn’t going to perform well enough for me anymore. I poured a lot of research into getting the performance that I did get out of it, and I don’t see myself squeezing any more out of it without some kind of major breakthrough.

Interesting that you weren’t able to get much above 6gbps. I finally had to ditch the R86S in favor of an HP T740. A little bigger, but definitely an upgrade after installing a 4port 10Gbe Intel NIC. Nice thing is that I was able to do what I originally intended, which was have a hypervisor running OPNsense as a VM. I use Unraid and not Proxmox, though. Proxmox is a lot of effort to get roll-your-own solutions working properly, I’ve found. Seems much better suited to industry standard hardware that’s built for the job.

Unraid also makes running containered applications dirt simple. So I moved whatever packages I was running in OPNsense away from it and now they’re running as containers on the same host. So far, everything seems alright.. except for latency.

It’s fine? I mean I ping 1.1.1.1 at about 4ms, but pings to google DNS (8.8.8.8) return something like 20ms consistently. I assumed upgrading to beefier hardware would help, but it’s pretty much the same. Interestingly, using an old Netgear Nighthawk, my pings to cloudflare and google were rarely more than 2ms. Same ISP, same location, just different router.

I also was only getting max 5gbps on a 10gbps pipe. What I found yesterday when playing around was that since I have 8 effective cores to play with, I bumped OPNsense’s VM to 7 cores (leaving core 0 free and pinned cores, not isolated, for anyone using Unraid) and now my speeds hover between 5Gbps to the max I’ve hit, 8Gbps. So definitely hardware makes a difference.

I’m going to try setting up the tuning you suggested in this article to see if I can push it further, but if the latency issue is still present when I’m playing a game with friends, I’m going to have to try something else. Looking at OpenWRT right now.

Yeah, I still think it was more of a case of a bad interaction between FreeBSD and Proxmox. My Debian VM running nftables is having no difficulties pushing about 9.7Gbps. I actually still have OPNSense running as my DHCP server, my incentive to replace it is rather low, everything is working fine and my “router hypervisor” has plenty of resources to keep it running. I may rebuild it eventually but I also know the configuration files will be more of a hassle to deal with than the GUI, I have a lot of DHCP static reservations.

I am a bit surprised to hear that you’re seeing major latency differences between different routers to some destination IPs. I’d investigate that further with MTR and see where the latency is originating from. The behavior you’re describing doesn’t make a lot of sense to me with the context as I’m interpreting it.

I -have- seen situations where your DNS server affects your ping to, for example, google.com, because the DNS server resolves an IP for a Google server located geographically very far away from you. I have not seen a situation where latency to 8.8.8.8 or 1.1.1.1 is affected dramatically by the router. I am quite sure that both of those IPs are anycast IPs, and your ISP should always want to route you to the closest destination server for the IP. My guess is that your path taken to get there is changing for some reason. If your router were causing a 15ms latency increase, you should observe the increase to any destination host.

Ok, good to know. Being networking isn’t a strong point for me, I’ve made assumptions that could be pointing me in directions I don’t need to follow.

One thing I’ll point out is that originally, I had DNS resolve to OPNsense through Unbound based on a guide I followed that then had query forwarding set to point to AdGuard installed within OPNsense. When I set up AdGuard initially, it was installed as a plugin but after setting up this new host, I didn’t install the plugin in OPNsense, running AdGuard as a container instead. However, I didn’t change the query forwarding portion, other than to point to AdGuard on its own IP.

I now think that was a mistake from the outset. I should’ve just had AdGuard reside somewhere else, not as a plugin, but also setting it up with query forwarding seems like the wrong idea. I think OPNsense was probably doing more work than was needed and for little benefit, at least in my use case, which is a private home network.

After some tuning, disabling query forwarding, and letting AdGuard handle DNS and using Unbound to manage internal DNS queries, I did notice a marked improvement in name resolution, so the overall effect is that everything feels snappier. However, latency hasn’t really changed.

Not sure why the router would make a difference with ping times either, but it definitely does. I haven’t been able to test out hosting with the one game I play with friends just because of scheduling issues, but if moving DNS off of OPNsense helps it, then I’ll be happy.. though also very confused because clearly I’m missing some fundamental truth about how DNS works..

I’m not able to think of any reason why latency would be so dramatically affected either. I would install MTR and run a test to the same IP address, then swap the router and do the same. This will show you if any difference exists and where along the route that difference is originating.

MTR is available on pretty much any operating system, I found a guide here to help you get it going on Windows, Mac, or Linux since I’m not sure which platform you’re using: https://chemicloud.com/kb/article/how-to-perform-a-mtr-in-windows-mac-os-and-linux/

Run the MTR test on each router to the same destination IP (like 8.8.8.8, 1.1.1.1, etc) and try to leave it running for at least a minute or two, the longer it’s running the more aggregated data it will collect and the more precise\accurate your result is likely to be.

If you’re not sure how to interpret the MTR results, you can just post them here and I’ll look at them.

So I bit the bullet and switched to OpenWRT last night. I was just having a bunch of performance issues with OPNsense and it was causing high CPU usage on the host and spinning up the fan constantly.

Before I did, I set up MTR within OPNsense and ran a quick test (thank you, MTR feels magical, like one of those tools that everyone in the know uses but nobody really talks about).

I didn’t run lengthy tests. I was just getting a quick feel for it while configuring OpenWRT at the same time, but what I got before I shut down the VM may be of some interest.

    root@OPN-FW-01:~ # mtr 8.8.8.8 -r
    Start: 2023-08-08T14:00:17-0700
    HOST: OPN-FW-01.home              Loss%   Snt   Last   Avg  Best  Wrst StDev
      1.|-- lo0.bras1.okldca11.sonic.  0.0%    10    1.4   1.5   1.0   2.9   0.5
      2.|-- 301.irb.cr2.okldca11.soni  0.0%    10    5.8   7.2   3.2  19.7   5.0
      3.|-- 0.ae3.cr2.hywrca01.sonic.  0.0%    10  1380. 328.8  15.7 1380. 427.9
      4.|-- ???                        100.0    10    0.0   0.0   0.0   0.0   0.0
      5.|-- ???                        100.0    10    0.0   0.0   0.0   0.0   0.0
      6.|-- 100.ae1.nrd1.equinix-sj.s  0.0%    10    2.9   9.1   2.3  68.1  20.7
      7.|-- google.159.ae1.nrd1.equin  0.0%    10    2.8   3.0   2.8   3.3   0.2
      8.|-- 142.251.69.89              0.0%    10    6.8   5.3   4.5   6.8   0.7
      9.|-- 142.251.228.83             0.0%    10    3.1   3.0   2.8   3.1   0.1
     10.|-- dns.google                 0.0%    10    2.8   3.0   2.8   3.1   0.1

    root@OPN-FW-01:~ # mtr 1.1.1.1 -r
    Start: 2023-08-08T14:01:19-0700
    HOST: OPN-FW-01.home              Loss%   Snt   Last   Avg  Best  Wrst StDev
      1.|-- lo0.bras1.okldca11.sonic.  0.0%    10    1.2   1.9   0.5   7.2   1.9
      2.|-- 300.irb.cr1.okldca11.soni  0.0%    10    6.1  14.0   1.1  46.4  14.6
      3.|-- 0.ae2.cr2.rcmdca11.sonic.  0.0%    10   15.2 171.2  13.9 523.0 160.3
      4.|-- 0.ae2.cr1.rcmdca11.sonic. 80.0%    10  7569. 7656. 7569. 7743. 122.8
      5.|-- 0.ae1.cr6.rcmdca11.sonic. 90.0%    10  4271. 4271. 4271. 4271.   0.0
      6.|-- ???                        100.0    10    0.0   0.0   0.0   0.0   0.0
      7.|-- ???                        100.0    10    0.0   0.0   0.0   0.0   0.0
      8.|-- 100.ae1.nrd1.equinix-sj.s  0.0%    10    2.8  29.6   2.7  96.1  38.1
      9.|-- 51.ae3.cr2.snjsca11.sonic  0.0%    10    4.0   4.8   3.2  14.1   3.4
     10.|-- 172.71.152.4               0.0%    10    3.3   6.6   3.1  19.1   4.9
     11.|-- one.one.one.one            0.0%    10    3.2   3.5   2.6   8.1   1.6
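A common rule of thumb for reading a report like this: loss at an intermediate hop only matters if it carries through to the final hop, since many routers rate-limit or deprioritize the ICMP replies that MTR relies on. As a rough sketch of that rule (the function names and the regular expression are my own, not part of MTR), a few lines of Python can parse `mtr -r` rows and list the hops whose loss does not show up at the destination. The sample rows are taken from the 1.1.1.1 run:

```python
import re

# One hop row of `mtr -r` output. Accepts "|--" as well as the "|–"
# that some web pages render it as. Values like "7569." parse fine as floats.
HOP_RE = re.compile(
    r"^\s*(\d+)\.\|\S+\s+(\S+)\s+([\d.]+)%?\s+(\d+)\s+"
    r"([\d.]+)\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s*$"
)


def parse_report(text):
    """Return a list of dicts (hop, host, loss, avg) for each hop row found."""
    hops = []
    for line in text.splitlines():
        m = HOP_RE.match(line)
        if m:
            hops.append({
                "hop": int(m.group(1)),
                "host": m.group(2),
                "loss": float(m.group(3)),
                "avg": float(m.group(6)),
            })
    return hops


def summarize(hops):
    """Report end-to-end loss/latency and hops whose loss is likely cosmetic."""
    final = hops[-1]
    # Intermediate hops that show more loss than the destination itself.
    rate_limited = [h["hop"] for h in hops[:-1] if h["loss"] > final["loss"]]
    return {
        "final_loss": final["loss"],
        "final_avg": final["avg"],
        "rate_limited_hops": rate_limited,
    }


SAMPLE = """\
  3.|-- 0.ae2.cr2.rcmdca11.sonic.  0.0%    10   15.2 171.2  13.9 523.0 160.3
  4.|-- 0.ae2.cr1.rcmdca11.sonic. 80.0%    10  7569. 7656. 7569. 7743. 122.8
  6.|-- ???                        100.0    10    0.0   0.0   0.0   0.0   0.0
 11.|-- one.one.one.one            0.0%    10    3.2   3.5   2.6   8.1   1.6
"""

if __name__ == "__main__":
    print(summarize(parse_report(SAMPLE)))
```

By that rule, the 80% to 100% loss at hops 4 and 6 would be treated as cosmetic here, because the destination itself answers every probe with a low average. Ten probes is a small sample, so a longer run is still worth doing before drawing conclusions.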

Now that I have both VMs configured and DHCP/DNS running on separate servers outside of them, it should be a simple matter of passing the NICs back and forth between the two VMs after shutdown to test more thoroughly. I still have the old Nighthawk configured too, since it might be interesting to see what I get from that.

Really appreciate your help and interest in this.

Thanks for this! I changed my two vNICs in Proxmox from vmxnet3 to VirtIO and increased the multiqueue to 12 (the number of cores assigned to the VM). My speed went from 200Mbps to the full 1Gbps, which is what I’m subscribed to.

I thank you for putting in the effort. I was at the end of the rope and losing all hope. Unfortunately, in my case, none of these settings helped me. It is likely that my setup is different.

However, there was one setting that completely changed things for me. On my 10Gbps network, I went from not being able to get anything higher than 3.20Gbps to getting 9.9Gbps.

It was the MTU. I changed the MTU on my LAN and WAN interfaces in pfSense to match the MTU used everywhere else on my network for 10Gbps interfaces, going from the default 1500 bytes to 9000. And like magic, it worked!
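One likely reason this helps (my reading, not something measured in this thread) is that the router’s cost is largely per packet rather than per byte, and jumbo frames cut the packet rate sharply. A minimal sketch of the arithmetic, assuming standard Ethernet framing overhead and full-size packets:

```python
# Bytes each Ethernet frame occupies on the wire beyond the IP packet:
# 14 (header) + 4 (FCS) + 8 (preamble/SFD) + 12 (inter-frame gap).
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12


def packets_per_second(link_bps, mtu):
    """Full-size packets per second needed to fill a link at a given MTU."""
    return link_bps / ((mtu + ETHERNET_OVERHEAD) * 8)


standard = packets_per_second(10e9, 1500)
jumbo = packets_per_second(10e9, 9000)

if __name__ == "__main__":
    print(f"MTU 1500: {standard:,.0f} packets/s")
    print(f"MTU 9000: {jumbo:,.0f} packets/s")
    print(f"ratio:    {standard / jumbo:.2f}x fewer packets with jumbo frames")
```

At 10Gbps that works out to roughly 813,000 packets per second at MTU 1500 versus roughly 138,000 at MTU 9000. This only applies where every device on the path accepts 9000-byte frames, which is why the MTU has to match across the network.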

The setting can be navigated to by going to Interfaces and then LAN or WAN. MTU is about the 6th setting on that page.

I wanted to share this information here for anyone who is also at the end of their rope and wants to try one more thing.

Is net.inet.tcp.mssdflt=1240 correct? This setting essentially implements MSS clamping. If you’re using Ethernet, setting it to 1240 would result in packet fragmentation, potentially causing the described gaming issues. Ideally, it should be set to 1500 (Ethernet MTU) minus 20 (IPv4 header) minus 20 (TCP header) to yield 1460. Adjust accordingly for jumbo frames.
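The 1460 figure comes from subtracting both the IPv4 header and the TCP header from the MTU. A small sketch of that calculation, assuming headers without options (the function name is just for illustration):

```python
IPV4_HEADER = 20  # bytes, without IP options
IPV6_HEADER = 40  # bytes, fixed header only
TCP_HEADER = 20   # bytes, without TCP options


def mss_for_mtu(mtu, ipv6=False):
    """Largest TCP payload that fits in one packet of the given MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER


if __name__ == "__main__":
    print(mss_for_mtu(1500))             # standard Ethernet, IPv4
    print(mss_for_mtu(9000))             # jumbo frames, IPv4
    print(mss_for_mtu(1500, ipv6=True))  # standard Ethernet, IPv6
```

For standard Ethernet over IPv4 this gives 1460, and for a 9000-byte jumbo MTU it gives 8960.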

This is the problem he ran into. He didn’t disable interface scrub in Firewall Settings – Normalization. Search for the calomel article with net.inet.tcp.mssdflt. He has a very detailed explanation, and at the end there is a specific note:

“FYI: PF with an outgoing scrub rule will re-package the packet using an MTU of 1460 by default, thus overriding the mssdflt setting wasting CPU time and adding latency.”

My large list of tunables includes:

net.inet.tcp.mssdflt=1240

which also requires:

net.inet.tcp.abc_l_var=52

net.inet.tcp.initcwnd_segments=52
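The comment doesn’t say why 52 goes with 1240. One plausible reading, and it is only an assumption on my part, is that the segment count is chosen so that segments × MSS stays just under the 65,535-byte limit of an unscaled TCP window. The sketch below checks that arithmetic; the function name is hypothetical:

```python
MAX_UNSCALED_WINDOW = 65535  # largest TCP window without window scaling, in bytes


def max_initial_segments(mss, limit=MAX_UNSCALED_WINDOW):
    """Largest whole number of MSS-sized segments that fits under the limit."""
    return limit // mss


if __name__ == "__main__":
    for mss in (1240, 1460):
        segments = max_initial_segments(mss)
        print(f"mss={mss}: {segments} segments = {segments * mss} bytes")
```

Under that assumption, an MSS of 1240 gives 52 segments (64,480 bytes), and an MSS of 1460 would give 44, so the segment-count tunables would need to change if mssdflt does.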

I get my full line rate of 2.5Gbps at low CPU, I will test again when I get 5 or 10Gbps WAN.

Why did you select 4 sockets? Do you really have 4 dedicated CPU sockets on your motherboard (aka 4 real physical CPUs)? That kind of hardware is rare and usually very expensive. Are you sure you have 4 sockets with 24 cores on each CPU?

If not, and I assume you only have 1 physical CPU on your machine, set the sockets to ‘1’ and disable NUMA.

At that point in time, I was unaware that my configuration could have any performance impact. I don’t believe the number of cores was exceeded, which at the time was all I thought was significant.

Hi Kirk,

I just wanted to say thank you; this is an impressive guide. I used some of these tunables to try to resolve my inter-VLAN bottlenecking issues with 2.5 GbE in OPNsense. I’m still at a loss. I have an i225 NIC installed for my LAN. OPNsense is installed on bare metal. I often see iperf speeds reach ~2.34 Gb/s and then drop to ~600 Mb/s. I can also invoke this behavior by rapidly starting/restarting iperf transfers.

I am thinking there are still simply issues/bugginess between the i225s and FreeBSD. Any guidance would be appreciated, as it seems you really know your stuff.

I’m glad you found the guide useful!

My Internet has actually been upgraded to 10Gbps since I wrote this guide, and I didn’t find a way to get OPNsense up to speed with that. I ended up abandoning the BSD-based router distributions and went back to a time-tested method of mine: running a router on a Debian-based system (which I was able to virtualize in Proxmox while easily maintaining the 10Gbps performance).

At the point I threw in the towel on this, I somewhat concluded that FreeBSD’s drivers must just be behind the times for these multi-gigabit use cases. These router distributions are the only place I have really used BSD in the last 10 years. Setting up a router on a Debian VM solved my performance issues in the end, and I didn’t really mind giving up the web panel GUI that much.

I see. It’s really unfortunate since OPNsense is such a nice project, not to mention a powerful one given its open source status. I went as far as ordering another NIC to try out. We’ll see if that yields any help. The main thing will be finding the time to do it, haha. Thanks again!